Spoofing the Referer Header in Java to Scrape Data

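Date: 2022-12-14

Many websites defend against scraping by checking where a request came from: the Referer header, along with cookies and the User-Agent. Every such defense tends to be met with a workaround.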

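A crawler I maintain recently stopped returning data. The logs showed that the target site had introduced anti-scraping measures, and further investigation narrowed the cause down to a check on the Referer header. The fix is as follows: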

In Java, a site's HTML can be fetched with HttpURLConnection. HttpURLConnection lets you set the Referer request header yourself, which is enough to get past sites that rely on this kind of check:

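    import java.net.HttpURLConnection;
    import java.net.Proxy;
    import java.net.URL;

    // Open the connection, optionally through a proxy.
    // ProxyServerUtil is the author's own helper class and is not shown here.
    URL url = new URL(urlStr);
    HttpURLConnection connection;
    if (useProxy) {
        Proxy proxy = ProxyServerUtil.getProxy();
        connection = (HttpURLConnection) url.openConnection(proxy);
    } else {
        connection = (HttpURLConnection) url.openConnection();
    }
    connection.setRequestMethod("POST");
    // Send the Referer value that the target site expects to see.
    connection.setRequestProperty("Referer", "http://xxxx.xxx.com");
    connection.setRequestProperty("User-Agent", ProxyServerUtil.getUserAgent());
    // Give up after 10 seconds instead of hanging on connect or read.
    connection.setConnectTimeout(10000);
    connection.setReadTimeout(10000);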
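The snippet above stops once the connection is configured and depends on the author's ProxyServerUtil helper, which is not shown. As a rough, self-contained sketch of the same idea, the example below sets the Referer and User-Agent and then reads the response body. The class name `RefererFetcher`, the method `fetch`, the use of GET, and the UTF-8 decoding are all assumptions made for illustration and are not taken from the original crawler.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the original crawler.
class RefererFetcher {

    /**
     * Fetches urlStr with GET, sending the given Referer and User-Agent,
     * and returns the response body decoded as UTF-8 (an assumption; a real
     * crawler would usually honor the charset declared by the server).
     */
    static String fetch(String urlStr, String referer, String userAgent) throws IOException {
        HttpURLConnection connection = (HttpURLConnection) new URL(urlStr).openConnection();
        try {
            connection.setRequestMethod("GET");
            connection.setRequestProperty("Referer", referer);
            connection.setRequestProperty("User-Agent", userAgent);
            connection.setConnectTimeout(10000);
            connection.setReadTimeout(10000);

            int status = connection.getResponseCode();
            if (status != HttpURLConnection.HTTP_OK) {
                throw new IOException("Unexpected HTTP status " + status + " for " + urlStr);
            }

            // Copy the whole response body into memory.
            try (InputStream in = connection.getInputStream()) {
                ByteArrayOutputStream out = new ByteArrayOutputStream();
                byte[] buffer = new byte[8192];
                int n;
                while ((n = in.read(buffer)) != -1) {
                    out.write(buffer, 0, n);
                }
                return new String(out.toByteArray(), StandardCharsets.UTF_8);
            }
        } finally {
            connection.disconnect();
        }
    }
}
```

A call would look like `RefererFetcher.fetch(urlStr, "http://xxxx.xxx.com", userAgent)`, passing as the Referer whatever value the target site checks for. If the site also validates cookies or the User-Agent, those headers have to be plausible as well.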

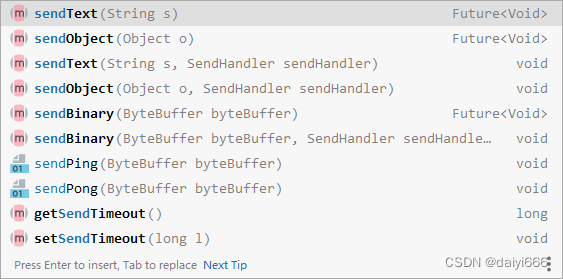

SpringBoot+WebSocket实现即时通讯的方法详解这篇文章主要为大家详细介绍了如何利用SpringBoot+WebSocket实现即时通讯功能,文中示例代码讲解详细,对我们学习或工作有一

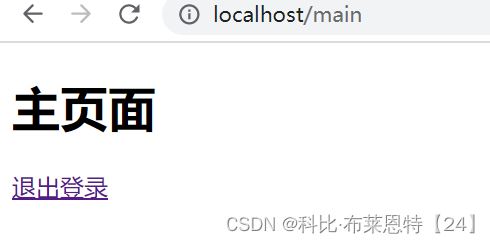

SpringBoot+WebSocket实现即时通讯的方法详解这篇文章主要为大家详细介绍了如何利用SpringBoot+WebSocket实现即时通讯功能,文中示例代码讲解详细,对我们学习或工作有一 Spring Security实现退出登录和退出处理器本文主要介绍了SpringSecurity实现退出登录和退出处理器,文中通过示例代码介绍的非常详细,对大家的学习或者工作具有一定

Spring Security实现退出登录和退出处理器本文主要介绍了SpringSecurity实现退出登录和退出处理器,文中通过示例代码介绍的非常详细,对大家的学习或者工作具有一定